IVRE is a natural, immersive virtual environment that lets a user instruct, collaborate with, and otherwise interact with a robotic system, either in simulation or in real time, via a virtual proxy. The user has access to a wide range of virtual tools for manipulating the robot, displaying information, and interacting with the environment. Topics include virtual robot instruction, monitoring, and user-guided perception. Our goal is to allow interaction with remote systems and with systems that are in hazardous surroundings or are unsafe for a human to interact with physically.

We are interested in understanding how virtual reality can be used for robotic systems, and this research attempts to answer that question. Our hypotheses about how virtual reality can be useful for robotics are:

- Improved presence: the user can have an experience when interacting with the robot that is similar to performing the task in the real world. This can also improve situational awareness. In addition, because the user can be represented with a first-person mapping to the robot, we can use the user’s proprioception to accomplish tasks in a more natural way.

- Information: virtual reality can allow the user to interact with information about the robot or task more effectively. Virtual displays can present situationally appropriate information in a visual location that has meaning; for instance, a message about the robot could be displayed on or near the robot. Virtual objects and tools can also carry information. For instance, a virtual box can identify an object as something the robot can manipulate, and handing the robot a virtual tool can tell the robot how that tool works and what the robot should do with it.

- Safety: even human-safe robots are often placed in environments (such as near heavy machinery) that are themselves dangerous. In such cases the robot itself is safe to train by physical contact, but the environment prevents the user from doing so. Virtual reality provides the same “grab and move” programming capability without requiring any physical contact.

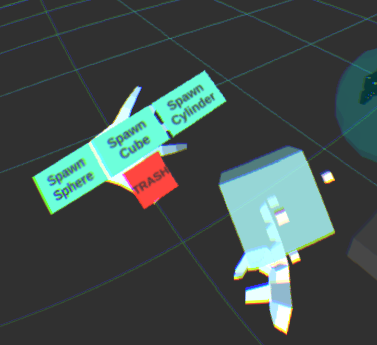

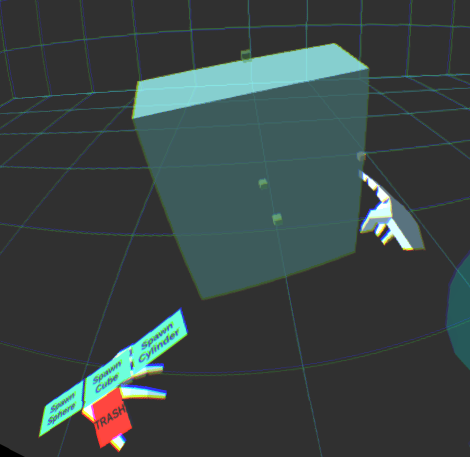

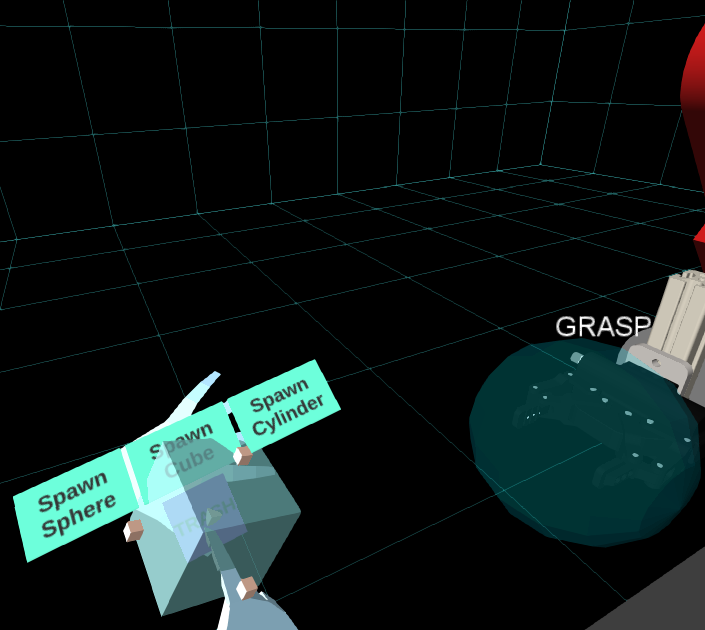

Virtual Interactive Objects (Actables)

Actables are user-created 3D virtual objects that represent geometry in the scene. The user can manipulate, resize, reposition, and delete them. For instance, a user might use an actable to represent a tool that the robot can use, or to support user-guided perception: if there is a ball on a nearby table that the user wants the robot to grab, they can create an actable sphere to define the ball so the robot can interact with it.
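To make the idea concrete, here is a minimal Python sketch of how an actable and its scene might be modeled. It is an illustration only and not IVRE's actual implementation: the names `Actable`, `Scene`, `reposition`, `resize`, and `grasp_target` are hypothetical, as are the choice of metres and the example coordinates.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Actable:
    """Hypothetical user-created virtual object (not IVRE's real class)."""
    name: str
    shape: str       # e.g. "sphere" or "box"
    position: Vec3   # assumed to be metres in the scene frame
    size: Vec3       # extent along x, y, z, assumed to be metres

    def reposition(self, new_position: Vec3) -> None:
        """Move the actable to a new location in the scene."""
        self.position = new_position

    def resize(self, scale: float) -> None:
        """Scale the actable uniformly about its own position."""
        if scale <= 0:
            raise ValueError("scale must be positive")
        self.size = tuple(s * scale for s in self.size)


class Scene:
    """Hypothetical container for the actables the user has created."""

    def __init__(self) -> None:
        self._actables: Dict[str, Actable] = {}

    def create(self, actable: Actable) -> Actable:
        if actable.name in self._actables:
            raise ValueError(f"actable {actable.name!r} already exists")
        self._actables[actable.name] = actable
        return actable

    def delete(self, name: str) -> None:
        del self._actables[name]

    def names(self) -> list:
        return sorted(self._actables)

    def grasp_target(self, name: str) -> Vec3:
        """Position that a robot planner could use as a grasp target."""
        return self._actables[name].position


# User-guided perception: mark the ball on the table with an actable sphere.
scene = Scene()
ball = scene.create(
    Actable("ball", "sphere", position=(0.4, 0.1, 0.75), size=(0.1, 0.1, 0.1))
)
ball.reposition((0.45, 0.1, 0.75))  # user nudges the sphere onto the real ball
target = scene.grasp_target("ball")  # what the robot would be asked to reach
```

In this sketch the robot never perceives the ball itself; it only receives the position of the sphere the user placed, which is the sense in which the perception is user-guided.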

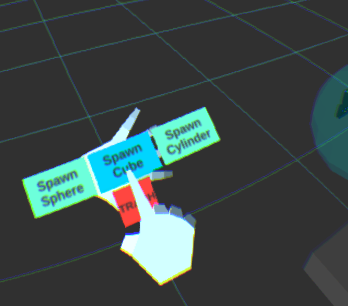

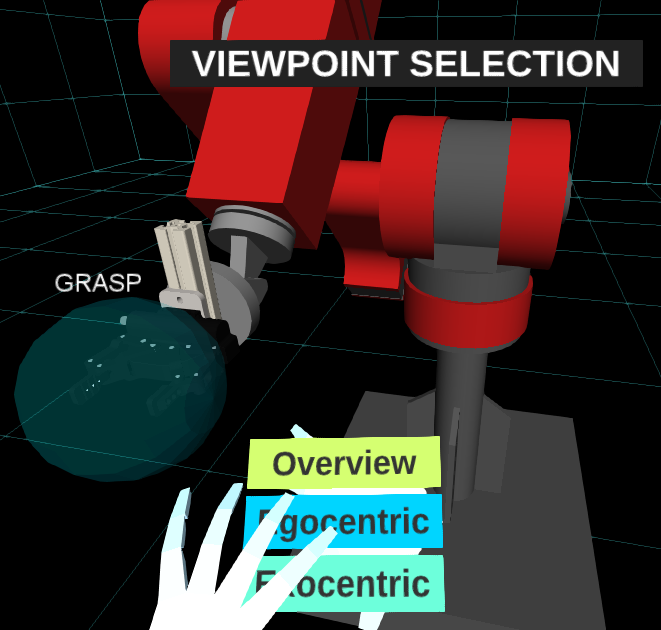

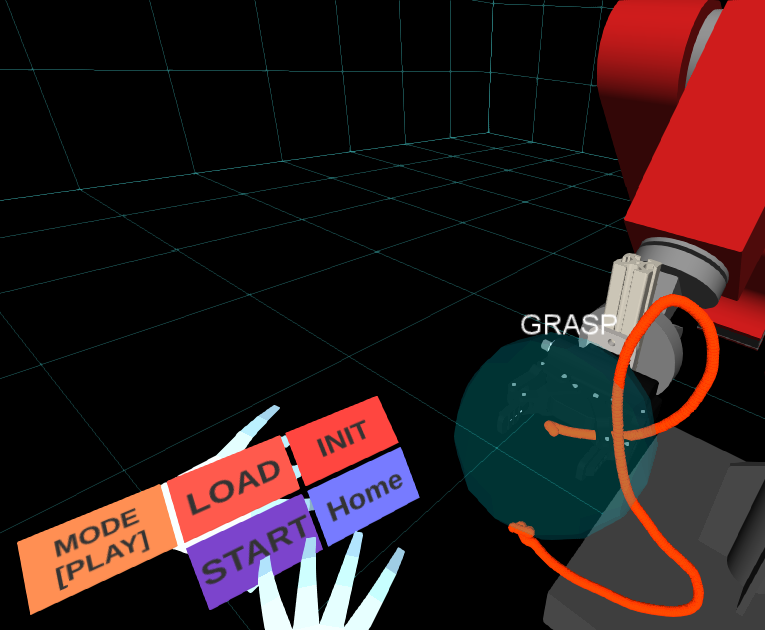

Virtual User Interfaces (VUIs)

Virtual user interfaces give the user a natural way to issue discrete commands to the robot, such as changing modes, creating or deleting resources, and changing views. We want to keep the user as “immersed” as possible in the interface, so we build menus and buttons that work without a mouse and keyboard.
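One way such a button could work without a mouse or keyboard is to trigger it when the user's tracked hand enters a small region around it. The Python sketch below illustrates that idea under stated assumptions: `VirtualButton`, `RobotInterface`, the mode names, and the 5 cm radius are all hypothetical and are not taken from IVRE.

```python
import math
from dataclasses import dataclass, field
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]


class RobotInterface:
    """Hypothetical holder for the current interaction mode."""

    def __init__(self) -> None:
        self.mode = "monitor"

    def set_mode(self, mode: str) -> None:
        self.mode = mode


@dataclass
class VirtualButton:
    """Hypothetical button pressed by moving a tracked hand into it."""
    label: str
    center: Vec3
    radius: float
    on_press: Callable[[], None]
    _hand_inside: bool = field(default=False, init=False)

    def update(self, hand_position: Vec3) -> bool:
        """Call once per frame; fires only when the hand first enters."""
        inside = math.dist(self.center, hand_position) <= self.radius
        fired = inside and not self._hand_inside
        self._hand_inside = inside
        if fired:
            self.on_press()
        return fired


ui = RobotInterface()
instruct_button = VirtualButton(
    label="Instruct",
    center=(0.0, 1.2, 0.5),
    radius=0.05,  # assumed 5 cm activation radius
    on_press=lambda: ui.set_mode("instruct"),
)

# Simulated hand positions over three frames: far away, touching, still touching.
for hand in [(0.3, 1.2, 0.5), (0.0, 1.21, 0.5), (0.0, 1.21, 0.5)]:
    instruct_button.update(hand)
```

Firing only on entry, rather than on every frame the hand is inside the region, keeps a single touch from triggering the same discrete action repeatedly.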

People Involved:

- Faculty: Gregory Hager

- Students: Kel Guerin

Funding Sources:

- NSF National Robotics Initiative Large