Immersive Display Wall

The Balaur Display Wall is a collaborative project between the Johns Hopkins School of Computer Science and the Johns Hopkins Milton S. Eisenhower Library. It is a high-resolution, 25-megapixel display system intended for research in multi-user gestural interaction with extremely large imagery and datasets. We are also interested in collaborative art and educational applications. The Balaur Wall is currently installed on the campus of Johns Hopkins University in the new Brody Learning Commons building.

Manipulating and Perceiving Simultaneously

The goal of this project is to develop a system, consisting of a robotic hand equipped with tactile sensors, that can autonomously explore an environment and identify objects it has encountered before, while manipulating unknown objects as necessary. Exploring an unknown object using solely haptic information requires advancing the state of the art in both object recognition and manipulation, as well as applying simultaneous localization and mapping (SLAM) techniques to the haptic domain. Our approach focuses first on adapting feature-based object recognition methods from computer vision to haptic object recognition.

Motion Tracking and Interaction

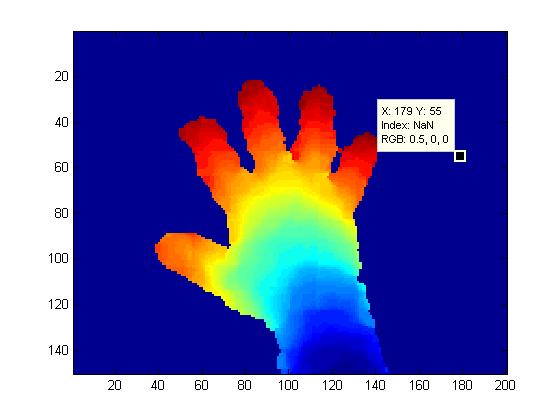

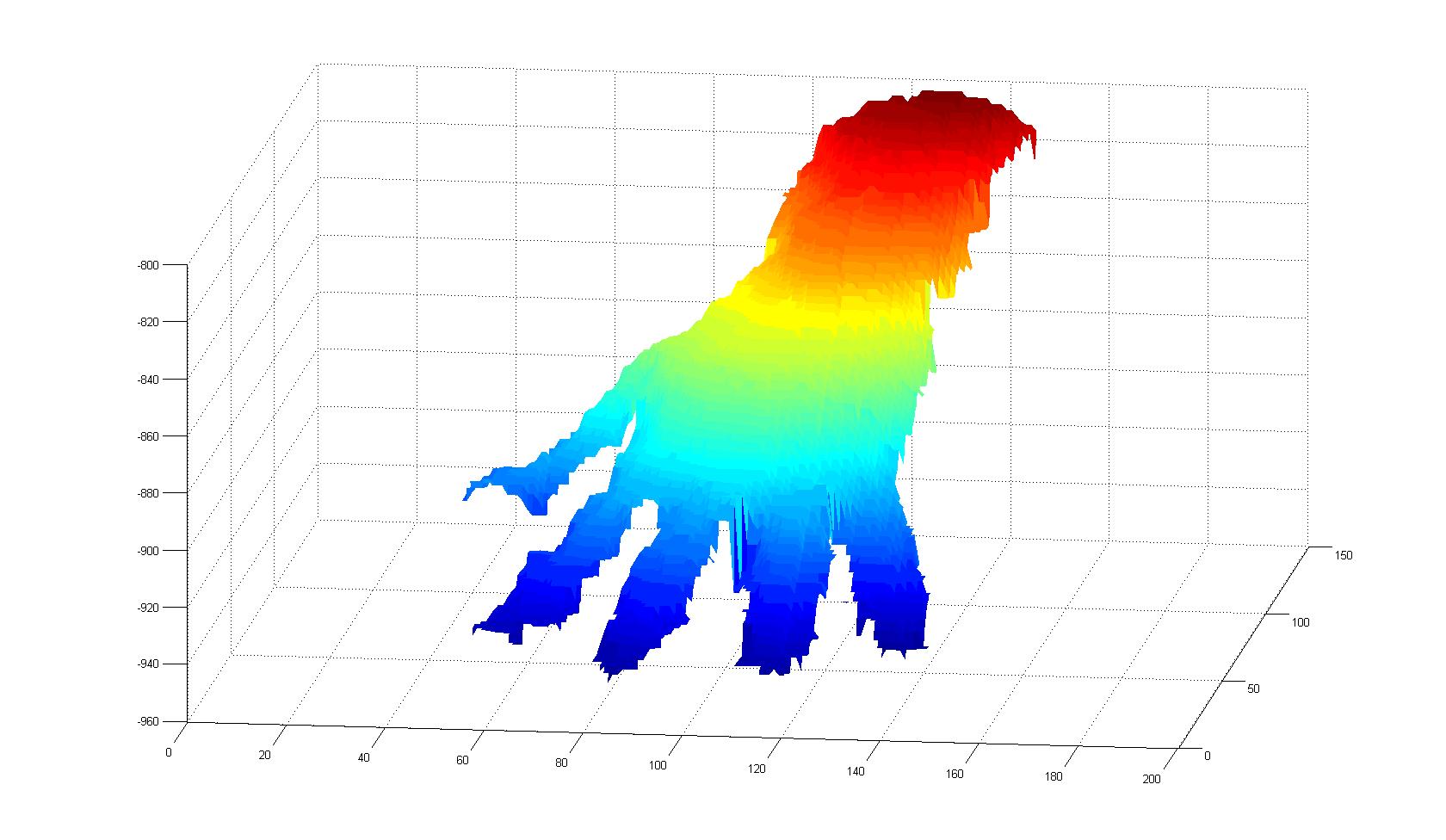

The goal of this project is to investigate new forms of human body and hand tracking for computer and robotic interaction systems. We are using the Microsoft Kinect and other sensing hardware to provide robust articulated tracking of the human body and hand. We are also exploring novel interfaces that use this rich information for interacting with large data sets and controlling robots.

Context-Aware Surgical Assistance for Virtual Mentoring

Minimally invasive surgery (MIS) is a technique whereby instruments are inserted into the body via small incisions (or in some cases natural orifices), and surgery is carried out under video guidance. While presenting great advantages for the patient, MIS presents numerous challenges for the surgeon due to the restricted field of view presented by the endoscope, the tool motion constraints imposed by the insertion point, and the loss of haptic feedback. One means of overcoming some of these limitations is to present the surgeon with a registered three-dimensional overlay of information tied to preoperative or intraoperative volumetric data. This data can provide guidance and feedback on the location of subsurface structures not apparent in endoscopic video. Our objective is to develop algorithms for highly capable context-aware surgical assistant (CASA) robotic systems that are able to maintain a dynamically updated model of the surgical field and ongoing surgical procedure for the purposes of assistance, evaluation, and mentoring.

Micro-Surgical Assistant Workstation

The Micro-Surgical Assistant Workstation is a system designed to aid and augment a human surgeon's performance of micro-surgical tasks. A micro-surgical task is simply a surgical task performed on such a small scale that it must be done while looking through a microscope. The goals of the project include both augmenting physical skill at micro-manipulation and fusing information for better situational awareness during surgery.

Endoscopic Mosaicking

With the advancement of minimally invasive techniques for surgical and diagnostic procedures, there is a growing need for methods that improve visualization of internal body structures. Video mosaicking is one such method: it provides a broader field of view of the scene by stitching together the images in a video sequence.

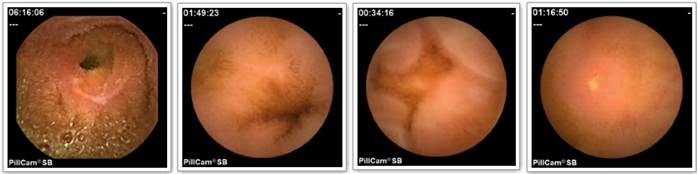
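Stitching a video sequence into a mosaic requires expressing every frame in one common reference frame. A minimal sketch, assuming the pairwise 3x3 homographies between consecutive frames have already been estimated, chains them so that every frame maps into frame 0. The function names and the convention that `pairwise[i]` maps frame i+1 into frame i are hypothetical choices for this example, not part of the project:

```python
from typing import List, Tuple

Matrix = List[List[float]]  # 3x3 homography in row-major order

def matmul3(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 3x3 matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)] for r in range(3)]

def chain_to_reference(pairwise: List[Matrix]) -> List[Matrix]:
    """Given pairwise[i] mapping frame i+1 into frame i (hypothetical convention),
    return one transform per frame mapping that frame into frame 0."""
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    to_ref = [identity]  # frame 0 is the mosaic reference
    for h in pairwise:
        # frame i+1 -> frame i, then frame i -> frame 0
        to_ref.append(matmul3(to_ref[-1], h))
    return to_ref

def apply_h(h: Matrix, x: float, y: float) -> Tuple[float, float]:
    """Map pixel (x, y) through homography h, dividing by the homogeneous coordinate."""
    u = h[0][0] * x + h[0][1] * y + h[0][2]
    v = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (u / w, v / w)

# Two consecutive 5-pixel horizontal shifts: frame 2's origin lands at x = 10 in the mosaic.
T = [[1.0, 0.0, 5.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(apply_h(chain_to_reference([T, T])[2], 0.0, 0.0))  # (10.0, 0.0)
```

Because each global transform is a product of pairwise estimates, registration errors accumulate along the sequence; practical mosaicking systems usually add some form of global adjustment, which this sketch omits.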

Capsule Endoscopy

Capsule endoscopy (CE) has recently emerged as a valuable imaging technology for the gastrointestinal (GI) tract, especially the small bowel and the esophagus. With this technology, it has become possible to directly evaluate the gut mucosa of patients with a variety of conditions, such as obscure gastrointestinal bleeding, Celiac disease and Crohn’s disease.

Although the use of capsule endoscopy is growing rapidly, the evaluation of capsule endoscopic imagery presents numerous practical challenges. In a typical case, the capsule acquires 50,000 or more images over an eight-hour period. The quality of these images is highly variable due to the uncontrolled motion of the capsule as it moves through the GI tract, the complexity of the structures being imaged, and the inherent limitations of the disposable imager. In practice, relatively few of the study images (often fewer than 1,000) contain significant diagnostic content. As a result, it is challenging to create an effective, repeatable means of evaluating capsule endoscopic sequences.

In this NIH-funded effort, we are interested in creating a tool for semi-automated, objective, quantitative assessment of pathologic findings in capsule endoscopic data, in particular the quantitative assessment of lesions that appear in Crohn’s disease of the small bowel. We are developing statistical learning methods that perform lesion classification and assessment in a manner consistent with a trained expert.

To evaluate our methods, we have collected a substantial database of images of Crohn’s lesions, together with an expert assessment of several attributes indicative of lesion severity. The database also contains a large number of other GI anomalies seen in CE. Recent publications on reducing the complexity of CE assessment, both by reducing the number of images a clinician must examine and by statistical methods for assessment, appear on the publications page.

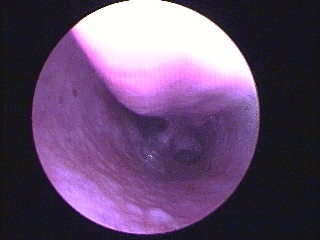

Computer Vision for Sinus Surgery

The focus of this project is the problem of registering an endoscopic video sequence to a preoperative CT scan, with applications to sinus surgery. The main goal is to accurately track the tip of the endoscope in real time using vision techniques, and thus to determine tool tip locations within the sinus passage.

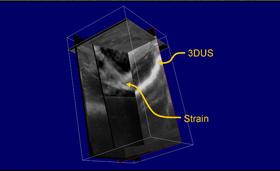

Advanced Ultrasound Imaging and Interventions

Members of the MUSIIC Lab are working on projects focused on motion tracking in ultrasound images. Projects include ultrasound elastography using a dynamic programming approach, as well as ultrasound segmentation and 3D reconstruction. These advanced ultrasound imaging techniques are used for monitoring and interventions in breast, liver, and prostate cancer.
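As a rough illustration of how dynamic programming applies to displacement estimation, the sketch below picks one integer axial displacement per sample of a pre-compression signal, trading off intensity mismatch against a smoothness penalty between neighboring samples. It is a generic Viterbi-style formulation written for this page, not the lab's algorithm; the function name, the absolute-difference data cost, the non-negative displacement range, and the `smooth` weight are all assumptions:

```python
from typing import List

def dp_displacement(pre: List[float], post: List[float], max_disp: int, smooth: float) -> List[int]:
    """Choose displacement d_i in [0, max_disp] for each sample i of `pre`, minimizing
    sum_i |pre[i] - post[i + d_i]| + smooth * sum_i |d_i - d_{i-1}|."""
    n = len(pre)
    disps = list(range(max_disp + 1))
    inf = float("inf")

    def data_cost(i: int, d: int) -> float:
        j = i + d
        if j >= len(post):
            return inf  # displacement would run past the end of the post-compression signal
        return abs(pre[i] - post[j])

    # cost[i][k]: best total cost for samples 0..i with sample i at displacement disps[k]
    cost = [[inf] * len(disps) for _ in range(n)]
    back = [[0] * len(disps) for _ in range(n)]
    for k, d in enumerate(disps):
        cost[0][k] = data_cost(0, d)
    for i in range(1, n):
        for k, d in enumerate(disps):
            best_prev, best_val = 0, inf
            for kp, dprev in enumerate(disps):
                val = cost[i - 1][kp] + smooth * abs(d - dprev)
                if val < best_val:
                    best_prev, best_val = kp, val
            cost[i][k] = best_val + data_cost(i, d)
            back[i][k] = best_prev
    # Backtrack from the cheapest final state to recover the displacement path
    k = min(range(len(disps)), key=lambda kk: cost[n - 1][kk])
    path = [0] * n
    for i in range(n - 1, -1, -1):
        path[i] = disps[k]
        k = back[i][k]
    return path

# The post-compression signal is the pre-compression signal shifted by one sample.
pre = [0.0, 1.0, 5.0, 2.0, 7.0, 3.0]
post = [9.0, 0.0, 1.0, 5.0, 2.0, 7.0, 3.0, 8.0]
print(dp_displacement(pre, post, max_disp=3, smooth=0.5))  # [1, 1, 1, 1, 1, 1]
```

Strain, the quantity usually displayed in elastography, would then be estimated from the spatial derivative of the recovered displacement field.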

Articulated Object Tracking

Many objects encountered in the real world can be described as kinematic chains of parts with roughly uniform appearance characteristics. We developed a GPU-accelerated method for tracking such objects in single- or multi-channel (e.g., stereo) video streams in diverse domains. In brief, the method models the appearance of the various object parts, renders a 3D model of the target object geometry from each view, and measures the consistency of the resulting image with an appearance class probability map derived from the video images. It has been demonstrated in both surgical and generic settings.
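The consistency measurement can be illustrated with a small scoring function. This sketch assumes that rendering produces a label image giving the object part (or background) visible at each pixel, and that the probability map stores one probability per class at each pixel; the log-likelihood score and all names are illustrative assumptions, and the GPU rendering step itself is omitted:

```python
import math
from typing import List, Sequence

LabelImage = List[List[int]]            # part index at each pixel (0 = background, assumed)
ProbMap = List[List[Sequence[float]]]   # per-pixel class probabilities

def consistency_score(rendered: LabelImage, prob_map: ProbMap, eps: float = 1e-6) -> float:
    """Sum of per-pixel log-probabilities of the rendered labels; higher means more consistent."""
    total = 0.0
    for y, row in enumerate(rendered):
        for x, label in enumerate(row):
            p = prob_map[y][x][label]
            total += math.log(max(p, eps))  # clamp so a zero probability does not give log(0)
    return total

def best_candidate(candidates: List[LabelImage], prob_map: ProbMap) -> int:
    """Index of the candidate rendering (e.g. one per hypothesized pose) with the highest score."""
    scores = [consistency_score(r, prob_map) for r in candidates]
    return max(range(len(scores)), key=lambda i: scores[i])

# Two-pixel example: pixel 0 looks like background, pixel 1 looks like part 1.
prob_map = [[(0.9, 0.1), (0.2, 0.8)]]
print(best_candidate([[[0, 1]], [[1, 0]]], prob_map))  # 0
```

A tracker built this way would evaluate the score for renderings at candidate poses and keep the best one; summing log-probabilities treats pixels as independent, which keeps the score cheap to compute.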

Real-time Video Mosaicking with Adaptive Parametrized Warping

Image registration, or alignment, for video mosaicking has many research and practical applications. Our motivation comes primarily from the medical field: we seek to overcome the fundamental tradeoff between field of view and resolution that occurs ubiquitously in endoscopic surgery. Computing the visual motion between successive images is the critical issue for registration, and there are two general approaches to it. Direct approaches use all available image pixels to compute an image-based error, which is then optimized. The complementary, feature-based approaches first detect specific image features and then estimate the correspondences between feature pairs in different camera views. The latter often have a larger range of convergence, at the cost of a prior feature-detection and correspondence stage.
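To make the direct approach concrete, the sketch below estimates a pure integer translation between two grayscale images by exhaustively minimizing the mean squared intensity difference over their overlap. This is a deliberate simplification assumed for the example: practical direct methods, including parametrized warping schemes, usually optimize richer motion models with gradient-based solvers rather than brute-force search. The function names are hypothetical:

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, indexed [row][column]

def ssd_error(ref: Image, mov: Image, dx: int, dy: int) -> float:
    """Mean squared difference between ref[y + dy][x + dx] and mov[y][x] over their overlap."""
    h, w = len(ref), len(ref[0])
    total, count = 0.0, 0
    for y in range(len(mov)):
        for x in range(len(mov[0])):
            ry, rx = y + dy, x + dx
            if 0 <= ry < h and 0 <= rx < w:
                diff = ref[ry][rx] - mov[y][x]
                total += diff * diff
                count += 1
    # Averaging (rather than summing) avoids favoring shifts with tiny overlaps
    return total / count if count else float("inf")

def register_translation(ref: Image, mov: Image, radius: int) -> Tuple[int, int]:
    """Return the (dx, dy) within [-radius, radius] that minimizes the direct pixel error."""
    best, best_err = (0, 0), float("inf")
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            err = ssd_error(ref, mov, dx, dy)
            if err < best_err:
                best, best_err = (dx, dy), err
    return best

# mov is a 4x4 crop of ref taken at column 1, row 2.
ref = [[float(10 * y + x) for x in range(6)] for y in range(6)]
mov = [row[1:5] for row in ref[2:6]]
print(register_translation(ref, mov, radius=2))  # (1, 2)
```

Every pixel in the overlap contributes to the error, which is what distinguishes this from the feature-based alternative, where only detected features and their correspondences enter the estimate.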

Human Machine Collaborative Systems

We are developing techniques for designing systems that amplify or assist human physical capabilities when performing tasks that require learned skills, judgement and dexterity. We refer to these systems as Human-machine Collaborative Systems (HMCS), as they generally seek to combine human judgement and experience with physical and sensory augmentation using advanced display and robotic devices.

Motion Compensation in Cardiovascular MR Imaging

Robust MR imaging of the coronary arteries is challenging due to the complex motion of these arteries induced by both respiratory and cardiac motion. Current approaches have had limited success because of the motion artifacts introduced by variability in cardiac and respiratory motion during data acquisition. The effects of this variability can be significantly reduced if the motion of the coronary artery can be estimated and used to guide MR image acquisition. We therefore propose a method that acquires high-speed, low-resolution images in specific orientations and extracts coronary motion from them to guide the high-resolution MR image acquisition. To show the feasibility of the proposed approach, we present and validate a multiple template tracking approach that allows reliable and accurate tracking of the left coronary artery (LCA) in low-resolution real-time MR image sequences in different orientations. We have also demonstrated, using MR simulations, that accounting for cardiac variability improves overall image quality.
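One plausible way to realize multiple template tracking is to keep several templates of the artery (for example, one per cardiac phase), match each against the current frame with normalized cross-correlation, and keep the strongest response. The sketch below assumes exactly that; the choice of zero-mean NCC, the exhaustive search, and the function names are illustrative assumptions rather than the published method:

```python
import math
from typing import List, Tuple

Image = List[List[float]]  # grayscale image, indexed [row][column]

def ncc(patch: Image, template: Image) -> float:
    """Zero-mean normalized cross-correlation of two equal-size patches, in [-1, 1]."""
    a = [v for row in patch for v in row]
    b = [v for row in template for v in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    den = math.sqrt(sum((p - ma) ** 2 for p in a) * sum((q - mb) ** 2 for q in b))
    return num / den if den > 0 else 0.0  # flat patches carry no structure to match

def track_multi_template(frame: Image, templates: List[Image]) -> Tuple[int, int, int, float]:
    """Slide every template over the frame; return (x, y, template_index, score)
    for the best-matching top-left position across all templates."""
    best = (0, 0, 0, -2.0)  # below the minimum possible NCC value
    height, width = len(frame), len(frame[0])
    for t_idx, tmpl in enumerate(templates):
        th, tw = len(tmpl), len(tmpl[0])
        for y in range(height - th + 1):
            for x in range(width - tw + 1):
                patch = [row[x:x + tw] for row in frame[y:y + th]]
                score = ncc(patch, tmpl)
                if score > best[3]:
                    best = (x, y, t_idx, score)
    return best

# A diagonal pattern at column 2, row 1 matches the second template exactly.
frame = [[0.0] * 5 for _ in range(5)]
frame[1][3] = 1.0
frame[2][2] = 1.0
templates = [[[1.0, 1.0], [0.0, 0.0]], [[0.0, 1.0], [1.0, 0.0]]]
x, y, idx, score = track_multi_template(frame, templates)
print((x, y, idx))  # (2, 1, 1)
```

Using several templates lets the tracker follow an artery whose appearance changes over the cardiac cycle, since whichever template best fits the current phase wins at each frame.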

Multi-Modality Retinal Image Registration

Optical coherence tomography (OCT) is a non-invasive imaging modality analogous to ultrasound, but using light instead of sound. Registering pre-operative OCT images to the more familiar and easily available intra-operative fundus images allows precise localization of pathologies that might otherwise be invisible, enabling a wider array of interventions.

Vision-Based Human Computer Interaction

The Visual Interaction Cues (VICs) project is focused on developing new techniques for vision-based human computer interaction (HCI). The VICs paradigm is a methodology for vision-based interaction operating on the fundamental premise that, in general vision-based HCI settings, global user modeling and tracking are not necessary.

Image Segmentation

We have proposed a subspace labeling technique for global image segmentation. Image segmentation in a particular feature subspace is a fairly well-understood problem.

XVision Real-Time Tracking System

XVision provides an application-independent set of tools for visual feature tracking, optimized to be simple to configure at the user level yet extremely fast to execute.