Announcement

- 3/14/2018 JHUSEQ-25 has been upgraded and submitted to ECCV 2018. [Update: 7/25/2018 new JHUSEQ-25 ECCV accepted]

- 9/26/2017 Object segmentation masks and python reader are provided for JHUSEQ-25!

- 1/23/2017 Textured meshes of hand tools used in JHUSecne-50 and JHUSEQ-25 are available!

- 1/17/2017 Download packages of JHUSEQ-25 is available!

- 2/30/2017 JHUSEQ-25 has been submitted to IROS 2017. [Update: 6/15/2017 JHUSEQ-25 IROS 2016 accepted]

- 5/13/2016 Download packages of JHUScene-50 is available!

- 9/15/2015 JHUScene-50 has been submitted to ICRA 2016. [Update: 1/15/2016 JHUScene-50 ICRA 2016 accepted]

- 8/1/2015 Download packages of JHUIT-50 and LN-66 are available!

- 3/1/2015 LN-66 has been submitted to ISRR 2015. [Update: 4/15/2015 LN-66 ISRR 2015 accepted]

- 11/15/2014 JHUIT-50 has been submitted to CVPR 2015. [Update: 1/15/2015 JHUIT-50 CVPR accepted]

Introduction

The JHU Visual Perception Datasets (JHU-VP) contain benchmarks for object recognition, detection and pose estimation using RGB-D data. In particular, these datasets are designed to evaluate perception algorithms in typical robotics environments. Currently, the releases of the data include a SLAM-based scene understanding dataset JHUSEQ-25[1], a pose estimation dataset JHUScene-50[2], an object classification benchmark JHUIT-50[3] and a scene dataset LN-66[4]. PrimeSense Carmine 1.09 depth sensor (short range) was used to capture RGB-D data. The calibrated camera parameters can be downloaded from here. More data will be coming very soon!

For each RGB-D data, we provide a point cloud in pcd format with each point type as pcl::PointXYZRGBA, a corresponding depth map, a RGB image and a binary object mask. Please refer to PCL library for more information about the pcd file.

Textured meshes of the hand tools used in JHUSecne-50 and JHUSEQ-25 can be separately downloaded from here.

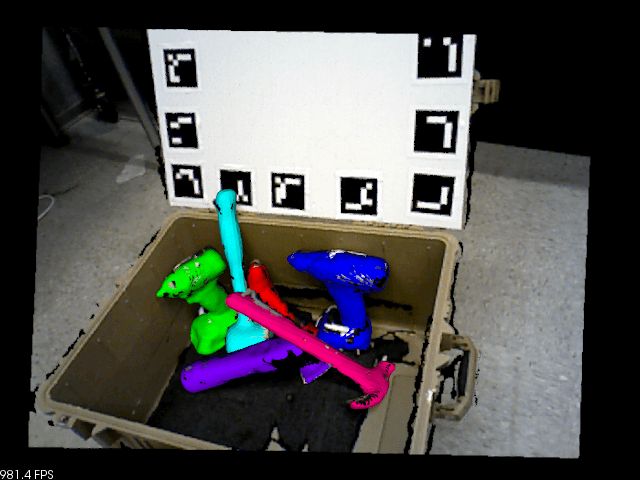

JHUSEQ-25:

25 RGB-D video sequences of office scenes captured at 20Hz ~30Hz.

25 RGB-D video sequences of office scenes captured at 20Hz ~30Hz.- >400 frames per video.

- 6-DoF pose groundtruth is provided for 10 hand tools in each video.

- Ideally used for testing SLAM-based recognition methods

JHUScene-50:

- This dataset contains 50 indoor scenes, 5000 testing frames and 22520 labeled poses for 10 common hand tools used in mechanical operations. More details can be found in [1].

- We provide a simple C++ program to interact the dataset based on the PCL library .

- For each object, an CAD model and 900 real partial views with groundtruth segmentation are provided.

- Textured CAD models are coming soon.

JHUIT-50:

- This dataset contains 50 industrial objects (shown in right figure) and hand tools used in mechanical operations. More details can be found in [2].

- The training and testing data are captured in non-overlapping viewpoints. This benchmark is good for testing the robustness of your algorithm to 3D rotation.

- The prefix of each file is formatted as “{object name}_{seq id}_{frame id}” in which “seq id” is ranging from 0-6. Training data for each object corresponds to the file with “seq id”<4 and the remaining is test data.

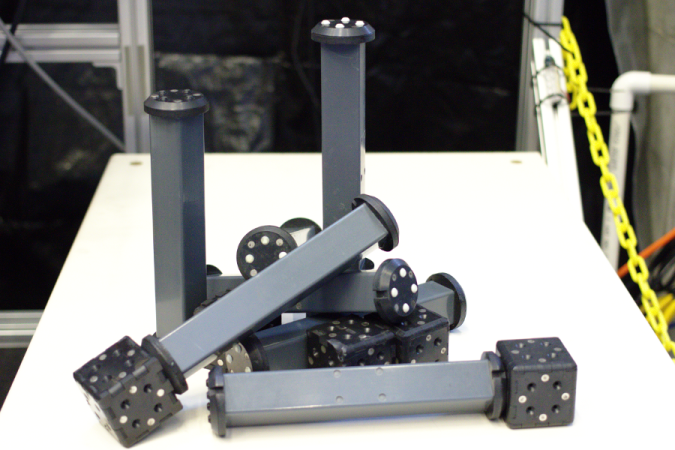

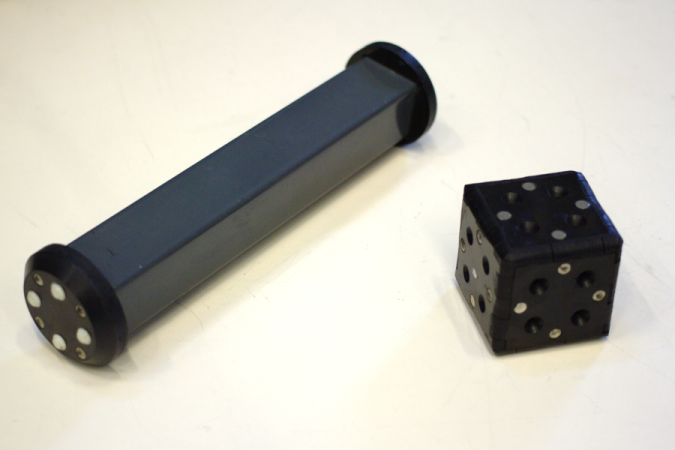

LN-66:

This scene dataset contains 614 testing scene examples with various complex configurations of the “link” and “node” objects. Both objects can also be found in JHUIT-50. More details can be found in [3]. The right figure shows the “link” and “node” objects and an example scene.

This scene dataset contains 614 testing scene examples with various complex configurations of the “link” and “node” objects. Both objects can also be found in JHUIT-50. More details can be found in [3]. The right figure shows the “link” and “node” objects and an example scene.- The downloaded package includes raw scene point clouds formatted as “ln_{frame id}.pcd” in folder LN_66_scene, object pose groundtruth formatted as “{object name}_gt_{frame id}.csv” in folder LN_66_gt and object data in folder training_objects.

- We provide object mesh files in two formats (.obj) and (.vtk). There is no color info in object mesh for now.

Downloads

** This dataset is released for the purpose of academic research, not for commercial usage. **

References

If you use the object database JHUIT-50 and scene dataset LN-66, or refer to their results, please cite the following papers accordingly:

- Chi Li, Jin Bai and Gregory D. Hager. A Unified Framework for Multi-View Multi-Class Object Pose Estimation, In European Conference on Computer Vision (ECCV), 2018. [pdf]

- Chi Li, Han Xiao, Keisuke Tateno, Federico Tombari, Nassir Navab and Gregory D. Hager. Incremental Scene Understanding on Dense SLAM, In International Conference on Intelligent Robots and Systems (IROS), 2016. [pdf]

- Chi Li, Jonathan Bohren, Eric Carlson and Gregory D. Hager. Hierarchical Semantic Parsing for Object Pose Estimation in Densely Cluttered Scenes, In International Conference on Robotics Automation (ICRA), 2016. [pdf]

- Chi Li, Austin Reiter and Gregory D. Hager. Beyond Spatial Pooling: Fine-Grained Representation Learning in Multiple Domains, In Computer Vision and Pattern Recognition (CVPR), 2015. [pdf]

- Chi Li, Jonathan Boheren and Gregory D. Hager. Bridging the Robot Perception Gap With Mid-Level Vision, In International Symposium on Robotics Research (ISRR), 2015. [pdf]

Acknowledgements

Credits to Chi Li, Jonathan Boheren, Jin Bai, Felix Jonathan, Hanyue Liang, Chris Paxton, Anni Zhou, Zihan Chen, Han Xiao, Yuanwei Zhao, Bo Liu and Eric Carlson for collecting data and developing the data-collection software.

Special acknowledgement to Gregory D. Hager and Austin Reiter for their support and suggestions in building this dataset.

This dataset is funded by the National Science Foundation under Grant No. NRI-1227277.

Feedback

Please let us know your questions, comments and suggestions via chi_li [at] jhu [dot] edu. We appreciate your feedback!