CIRL currently supports four main research thrusts in human-machine collaborative systems: systems for collaboration, object recognition and scene understanding, fine-grained action recognition, and deep learning of robot trajectories from expert demonstrations. Many of these projects were funded by the NSF National Robotics Initiative grant "Multilateral Manipulation by Human-Robot Collaborative Systems."

CoSTAR: The Collaborative System for Task Automation and Recognition

Traditional robot manipulators are prohibitively costly for many small-lot manufacturing entities (SMEs), whose assembly lines change rapidly and whose aging equipment has highly variable performance. We aim to simplify robot integration, improve the efficiency of collaborative industrial robot systems, and enable their application in complex, dynamic environments.

Our CoSTAR system provides a powerful visual programming interface to a broad range of robot capabilities. We demonstrated the power of this system at the KUKA Innovation Award competition at Hannover Messe 2016, where we won a €20,000 prize over 25 other entrants and 5 other finalist teams.

Related Projects

- The CoSTAR System

- IVRE: An Immersive Virtual Robotics Environment

- Improved Collaboration with Industrial Robots

- ASCENT – Augmented Shared Control for Efficient and Natural Teleoperation

- Assistive Technologies for Quadriplegics

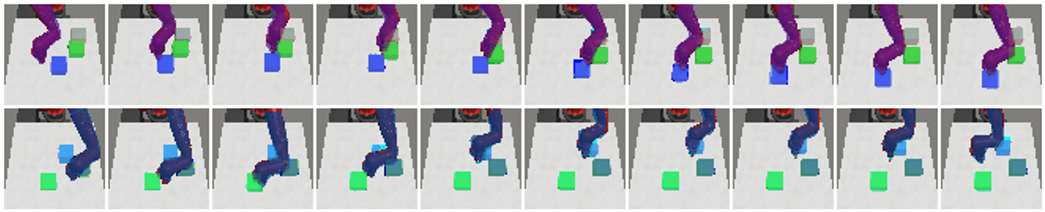

Object Recognition and Scene Understanding

Visual perception is crucial for many robotics applications, such as autonomous navigation, home service robots, and industrial manufacturing. However, most existing methods are not robust to difficult scenarios such as densely cluttered scenes, heavy object occlusion, and large intra-class object variation. We study problems including object classification, semantic segmentation, and 6-DoF object pose estimation.

Related Projects

- JHU CoSTAR Block Stacking Dataset

- Multi-Domain Pooling for Robust Object Instance Classification

- Hierarchical Semantic Parsing for Object Pose Estimation in Densely Cluttered Environments

- JHU Visual Perception Datasets

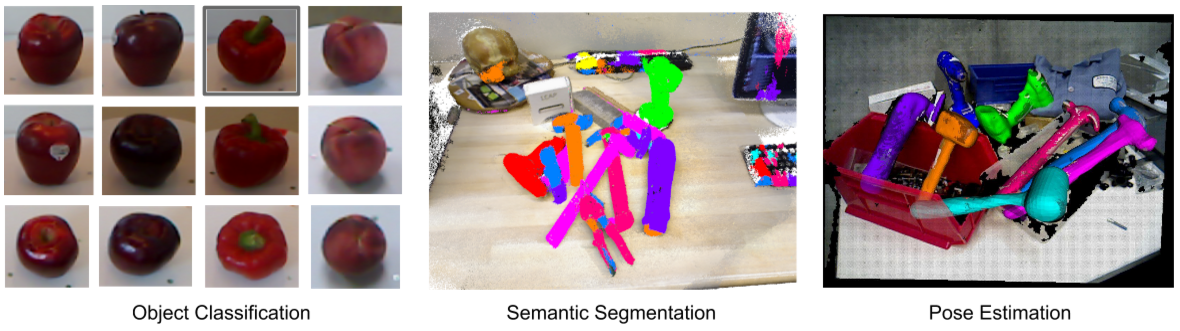

Fine-grained Action Analysis

The ability to automatically recognize a sequence of actions is important for applications ranging from collaborative manufacturing to surgical skill assessment. In this work, we jointly segment a sequence of actions in time and classify each segment, in situated settings, using sensor data (e.g., robot kinematics and accelerometer data) and video.
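The output of joint temporal segmentation and classification can be pictured as one action label per time step, where each maximal run of a single label forms one action segment. The Python sketch below is an illustration only, not code from these projects: it assumes a model has already produced frame-wise labels and collapses them into `(label, start, end)` segments. The function name `frames_to_segments` and the example labels are hypothetical.

```python
from typing import Hashable, List, Sequence, Tuple


def frames_to_segments(labels: Sequence[Hashable]) -> List[Tuple[Hashable, int, int]]:
    """Collapse per-frame action labels into (label, start, end) segments.

    `end` is exclusive, so a segment covers frames start .. end-1.
    (Hypothetical helper for illustration; not from the CIRL codebase.)
    """
    segments: List[Tuple[Hashable, int, int]] = []
    if len(labels) == 0:
        return segments
    start = 0
    for t in range(1, len(labels)):
        # A change in label marks the boundary between two actions.
        if labels[t] != labels[t - 1]:
            segments.append((labels[start], start, t))
            start = t
    # Close the final segment at the end of the sequence.
    segments.append((labels[start], start, len(labels)))
    return segments


# Hypothetical frame-wise predictions for a short pick-up motion.
frames = ["reach", "reach", "grasp", "grasp", "grasp", "lift"]
print(frames_to_segments(frames))
# [('reach', 0, 2), ('grasp', 2, 5), ('lift', 5, 6)]
```

Segment-level output like this is what makes it possible to reason about the order and duration of actions, rather than only about isolated frames.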

Related Projects

- Segmental Spatio-Temporal CNNs for Fine-grained Action Segmentation

- Learning Convolutional Action Primitives for Fine-grained Action Recognition

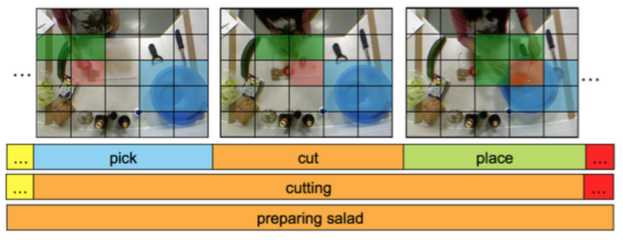

Planning with Knowledge Learned from Expert Demonstrations

To solve difficult problems, robots must be able to learn from human experts. Humans expect robots to solve tasks in ways that make intuitive sense to them. We propose to give robots this intuition through algorithms for combined task and motion planning that draw on knowledge learned both from watching humans perform tasks and from exploration.

In this work, we propose methods for grounding robot actions based on human demonstrations. We then describe the algorithms necessary to create task plans that sequence these actions to accomplish high-level goals.
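Once actions are grounded, sequencing them toward a high-level goal is a search problem. The sketch below is a toy STRIPS-style breadth-first planner, not the planning algorithm used in this work. It assumes each grounded action has already been reduced to symbolic preconditions and add/delete effects; the `Action` class, the `plan` function, and the block-stacking predicates are all hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Action:
    """A grounded action with symbolic preconditions and effects (hypothetical)."""
    name: str
    pre: FrozenSet[str]      # facts that must hold before execution
    add: FrozenSet[str]      # facts made true by the action
    delete: FrozenSet[str]   # facts made false by the action


def plan(start: FrozenSet[str], goal: FrozenSet[str],
         actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search for the shortest action sequence satisfying `goal`."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if goal <= state:  # every goal fact holds in this state
            return path
        for a in actions:
            if a.pre <= state:
                nxt = (state - a.delete) | a.add
                if nxt not in visited:
                    visited.add(nxt)
                    frontier.append((nxt, path + [a.name]))
    return None  # goal unreachable with these actions


f = frozenset
actions = [
    Action("pick(A)", f({"clear(A)", "ontable(A)", "handempty"}),
           f({"holding(A)"}), f({"clear(A)", "ontable(A)", "handempty"})),
    Action("stack(A,B)", f({"holding(A)", "clear(B)"}),
           f({"on(A,B)", "clear(A)", "handempty"}), f({"holding(A)", "clear(B)"})),
    Action("pick(B)", f({"clear(B)", "ontable(B)", "handempty"}),
           f({"holding(B)"}), f({"clear(B)", "ontable(B)", "handempty"})),
    Action("stack(B,A)", f({"holding(B)", "clear(A)"}),
           f({"on(B,A)", "clear(B)", "handempty"}), f({"holding(B)", "clear(A)"})),
]
start = f({"ontable(A)", "ontable(B)", "clear(A)", "clear(B)", "handempty"})
result = plan(start, f({"on(A,B)"}), actions)
print(result)  # ['pick(A)', 'stack(A,B)']
```

Breadth-first search is chosen here only because it is short and returns a shortest plan; learned knowledge from demonstrations would typically be used to guide or prune such a search rather than enumerate every state.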

Related Projects

Robot Manipulation from Pixels

In this project, we investigate how to complete robot manipulation tasks using only visual input. We propose extracting object-centric representations from raw images and then modeling the interactions among the objects. We also study robot pose estimation.